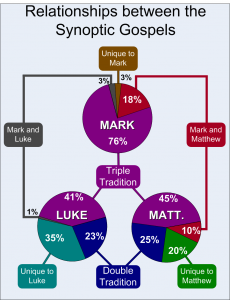

Two posts ago, I talked about one big change I made to my Introduction to the New Testament class last summer: taking the students through the Synoptic Gospels before teaching the Synoptic Problem itself. That change seemed immensely helpful, as it took an important (but typically uninteresting to the students) subject and forced the students to see the problem before the theoretical solutions. Another change I made was more administrative and took some time to set up, but it will now be a feature of all my future Introduction to the New Testament courses: using Respondus, I established a test bank of around 1,500 questions that I can import into any course management system out there (Blackboard, Sakai, etc.). I used this test bank to create regular timed online quizzes on Blackboard to accompany the reading. These quizzes were auto-graded and instantly showed me what percentage of students got a given question right or wrong, which gave some insight into whether I should spend a little extra time on a given point in class.
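For anyone whose course management system does not report this automatically, the per-question percentage is easy to compute from an export of quiz results. The following is only a minimal sketch, not Blackboard's or Respondus's own method: the `(student, question, is_correct)` record layout, the function names, and the 60% review threshold are all assumptions made for illustration.

```python
from collections import defaultdict


def percent_correct(responses):
    """Return {question_id: percent of answers that were correct}.

    `responses` is an iterable of (student_id, question_id, is_correct)
    tuples. This layout is hypothetical, not an actual export format.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for _student, question, is_correct in responses:
        totals[question] += 1
        if is_correct:
            correct[question] += 1
    return {q: 100.0 * correct[q] / totals[q] for q in totals}


def questions_to_review(responses, threshold=60.0):
    """List questions whose percent-correct falls below `threshold`.

    The 60% default is an arbitrary illustrative cutoff.
    """
    rates = percent_correct(responses)
    return sorted(q for q, rate in rates.items() if rate < threshold)
```

Questions that come back from `questions_to_review` would be the ones worth a few extra minutes in class.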

These questions are largely multiple-choice, but they also include matching, fill-in-the-blank, and other objective question structures. Although in most subjects I am something of a critic of multiple-choice questions, I think they can actually be very effective in New Testament (and Hebrew Bible) introductory courses if written properly. For example, I am a big proponent of “verse identification” questions, which ask students to identify which book a given verse is from. If a student can identify that the verse including “thus he made all things clean” is from the Gospel of Mark, it indicates that the student has actually processed some important thematic issues within the Gospels. Essentially, my goal is to force “essay level reflection for multiple choice questions,” asking questions that force students to think about why a given verse must be from a given book rather than another. I also do thematic questions (e.g. “which Gospel portrays Jesus as especially concerned with the poor?”) and other similar objective questions that require students to have understood the essence of what has been covered in the class. Then of course there are actual historical/data questions, asking about, say, the Pharisees or Alexander the Great. These sorts of questions, taken together, can really give a good picture of whether a student has grasped the material necessary for the course. (That the students came out to an average in the low “B” range with a median in the B+ range—which is about where I as an opponent of grade inflation would generally like them to wind up—was also a pleasant surprise.)

I also used these question banks, which included a pool of essay questions, to construct the midterm and final examinations, which (aside from the essays) were auto-graded and again gave instant access to student performance data on a per-question basis. Using automated tests both reduced my grading time and gave easier access to better assessment data, a win-win proposition. The students also generally found this arrangement preferable to other testing and assessment options. They did request that the essay portion of the final exam be separate from the rest of the questions, as the randomized question structure had thrown the essays into the mix at awkward times on the midterm. To address this issue, I simply created two separate exams (one essay, one with objective questions) that together made up the final exam.

The other advantage of putting in the extra time to create these test pools is that it cuts the time needed to create tests and quizzes for future courses. Because the test pools are so large and include a range of questions for each section of the course (and because the exams can be randomized), I can give different exams every semester with very little prep time. I do still have some additional work left to polish the pools (I’d like to group and keyword them for adaptive testing in the future), but the time I’ll have to spend on assessment from here on has been greatly reduced. As I teach Hebrew Bible as well, I intend to do the same for that class and ultimately all the introductory courses I teach, effectively automating the bulk of assessment for my introductory courses. This should afford me more time to research and to focus on the actual pedagogy in the classroom while also giving better data on student performance. Sometimes the move to computers really does make things smoother.
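The idea of drawing a different exam from the same pools each semester can be illustrated in a few lines. This is a rough sketch under stated assumptions, not how Respondus or Blackboard actually implement randomization: the pool layout (a dictionary mapping each course section to its list of questions), the `draw_exam` name, and the `seed` parameter are all hypothetical.

```python
import random


def draw_exam(pool, per_section, seed=None):
    """Pick a random set of questions from each section of a pool.

    `pool` maps a section name to a list of questions, and `per_section`
    maps a section name to how many questions to draw from it. Both
    layouts are hypothetical. Passing the same `seed` reproduces the
    same exam.
    """
    rng = random.Random(seed)
    exam = []
    for section, count in per_section.items():
        questions = pool[section]
        if count > len(questions):
            raise ValueError(f"not enough questions in section {section!r}")
        # sample() draws without replacement, so no question repeats.
        exam.extend(rng.sample(questions, count))
    # Shuffle so questions from one section are not clustered together.
    rng.shuffle(exam)
    return exam
```

Essay questions would be drawn separately and kept out of the shuffle, for the reason the students raised after the midterm.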

A few caveats: UNC requires students to have a notebook computer, meaning I could require students to bring a computer to class for the exams (the quizzes were generally timed quizzes to be taken at home). At institutions where this is not the case, this approach would naturally be more difficult to execute. Accommodating students with learning disabilities also takes extra work in this approach, as separate exams with different timing requirements must typically be created for those students, and it’s a little bit of extra work to get those exams to feed into the right grade column if you use the online gradebook on Blackboard (Sakai’s online assessment and gradebook functions are still pretty limited as well, making this even more difficult on Sakai). Finally, the other potential pitfall is that if you don’t have access to a tool like LockDown Browser (Carolina does not have access, for example), students can potentially use Google or other online tools to cheat rather easily. That’s why I put a time limit on the at-home quizzes (though this is imperfect, since students with extended-time accommodations get double the time, which is typically plenty of time to cheat on these quizzes). In the classroom, I simply require that they keep their browser maximized and open to the test window while I sit in the back of the classroom, so any change of screen should stick out pretty clearly. It’s not a perfect system, but I think it’s at least preferable to the old pen-and-paper method.

Comments

UNC does have a Lockdown Browser, or at least they did my freshman year. We used it for a film crit final – might be worth looking into.

To my knowledge they don’t have an institutional license for it anymore, but I could be wrong. I tried getting it for my class in the summer but wasn’t able to.
